This is the sequel to the previous article defining ultrafilters and establishing basic properties thereof. Again, the proof of Hindman’s theorem is from Imre Leader’s lecture course on Ramsey theory.

It seems initially paradoxical that ultrafilters are actually useful. After all, it is impossible to explicitly describe a non-principal ultrafilter, as the proof of their existence relies on the axiom of choice. Nevertheless, they have found many applications throughout mathematics, especially in topology and Ramsey theory.

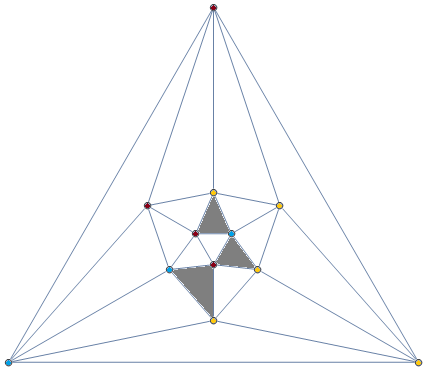

There is a hierarchy of filters with increasingly strong properties:

- Ordinary filters (useful in topology to generalise the notion of a sequence to apply to spaces which are not first-countable; originally, nets were used instead, but they have been largely obsoleted by filters)

-

Ultrafilters

- Non-principal ultrafilters

- Idempotent ultrafilters

- Minimal idempotent ultrafilters

Principal ultrafilters are not particularly interesting or useful, so we shall begin by seeing what we can achieve when armed with a non-principal ultrafilter. Deeper in the article, we shall explore the applications of idempotent and minimal idempotent ultrafilters.

Elections

When there are only two competitors in a democracy (and the electorate has an odd finite cardinality), it is rather trivial to determine the victor. Specifically, the following procedure should be applied:

-

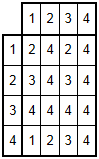

Each person in the electorate ranks the political parties.

- The votes are aggregated, and the party with the most votes wins.

For instance, in the 2008 US election, Barack Obama won with 69498516 votes, whereas the runner-up John McCain had 59948323 votes. Here’s a gratuitous infographic:

This simple majority voting system possesses many desirable qualities (stated in a way to generalise to n political parties):

- Non-dictatorship: No single person has total control over the election.

- Universality: The votes made by the electorate should deterministically determine a total ordering of political parties, the outcome.

- Independence of irrelevant alternatives: If S is a subset of the set of political parties, then the total ordering restricted to S in the outcome should depend only on the total ordering restricted to S in the preferences of each voter.

- Monotonicity: If some subset of the electorate increase the position of a party in their preferences, then this does not decrease the position of that party in the outcome.

- Non-imposition: Any of the n! outcomes (total orderings on the political parties) can be achieved.

Someone called Arrow famously proved that it is impossible to create a similarly ideal voting system when there are three or more political parties. For instance, it is very easy for two parties to have the same number of votes, so the naive majority idea does not generalise.

Hence, in the United Kingdom where there are more than two political parties, it is impossible for any of these sophisticated alternative vote systems to possess all of the desired qualities. Does this mean that we have no hope whatsoever?

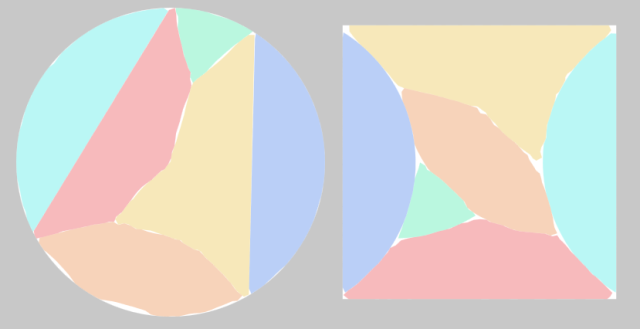

Actually, no: there is a subtle caveat, namely that Arrow’s impossibility theorem only holds when the electorate is finite. Otherwise, it breaks down spectacularly. Specifically, we have the following voting system:

- Let U be a non-principal ultrafilter on the electorate (set of voters).

-

Each voter selects his or her preferred outcome (out of n! possibilities).

- Precisely one of the n! possible outcomes is favoured by a measure-1 subset of the electorate, with respect to U. This is the overall outcome.

(If a principal ultrafilter were used instead, we would precisely have a dictatorship.)

In conclusion, we have a beautiful voting system where there are no dictators, and no ‘extremist groups’ of finite cardinality can control the outcome, either. Unfortunately it’s non-constructive by virtue of depending on the axiom of choice, but never mind. Non-principal ultrafilters save the day!

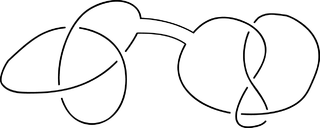

Ramsey’s theorem

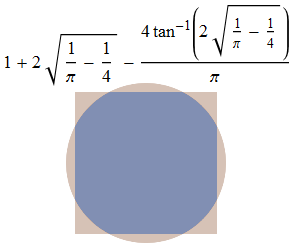

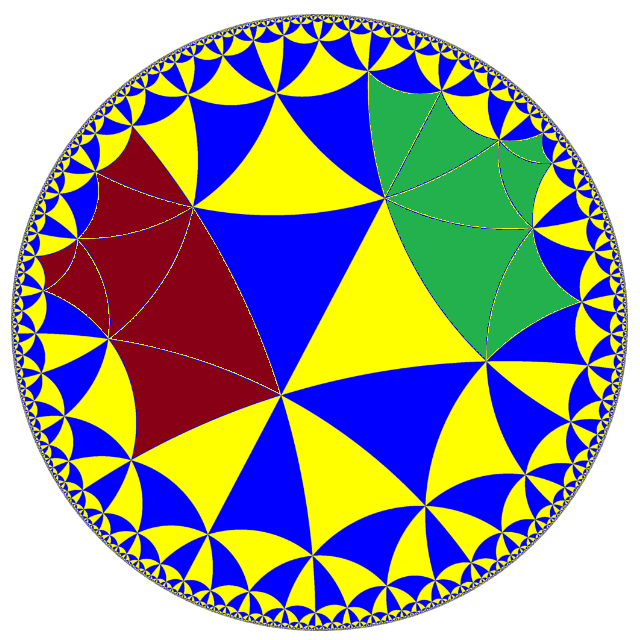

It’s possible to prove the infinite variant of Ramsey’s theorem (that any k-colouring of the edges of a countably infinite complete graph contains a monochromatic countably infinite complete induced subgraph) using elementary methods. However, there is a proof using ultrafilters which seems slightly cleaner.

In particular, we choose a non-principal ultrafilter U and choose the colour c satisfying (∀U x ∀U y : (x, y) is coloured with c), and intend to find a monochromatic infinite clique of that colour. We construct this sequentially, choosing a vertex x_n with the property that (∀U y : (x_n, y) is coloured with c) and such that the edges joining x_n to each of the previously-selected vertices {x_1, x_2, …, x_(n−1)} are all the correct colour. Repeat ad infinitum.

Now that we have an algorithm, we now need to prove that it actually works. How do we know that we can always choose such an x_n? Well, x_n must satisfy precisely the following conditions:

-

∀U y : (x_n, y) is coloured with c;

- (x_1, x_n) is coloured with c;

- …

- (x_(n−1), x_n) is coloured with c.

For each of these conditions considered in isolation, a measure-1 set of vertices have the desired property. There are finitely many such conditions, so their intersection also has measure 1, and in particular is non-empty. Hence, the inductive step of the proof is complete.

This proof also generalises in an obvious way to prove Ramsey’s theorem for hypergraphs (where the ‘edges’ are r-subsets instead of 2-subsets, for some fixed r). By comparison, proving the hypergraph Ramsey theorem using elementary methods involves a clever induction on the value of r.

The finite version of Ramsey’s theorem can then be deduced from the infinite version using a compactness argument. Unlike the finitary inductive proof of Ramsey’s theorem, however, this does not provide an explicit bound on how large a complete graph must be to contain a monochromatic subset of size n.

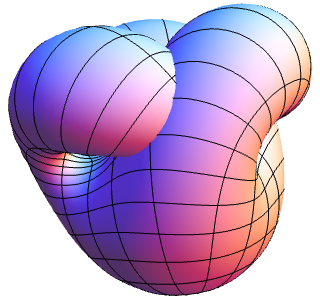

Hindman’s theorem

We’ve seen a basic application of ultrafilters in Ramsey theory, but it is only slightly easier than the finitary proof and doesn’t really justify developing the theory of ultrafilters. By comparison, the next result (Hindman’s theorem) is very deep, and the most elegant proof relies on the existence of idempotent ultrafilters.

Hindman’s theorem is a massively strengthened version of Schur’s theorem, one of the first results in Ramsey theory:

Schur’s theorem: If we finitely colour the positive integers, we can find x and y such that x, y and x+y are all the same colour.

This is really straightforward to prove. Specifically, we colour the edge (a, b) of the graph on the positive integers according to the colour of |a − b|. Then, by Ramsey’s theorem, we can necessarily find a, b and c such that (a, b), (b, c) and (c, a) are all the same colour (a monochromatic triangle). The result follows immediately.

A strict generalisation is Folkman’s theorem, which states that this is possible with arbitrarily many positive integers:

Folkman’s theorem: If we finitely colour the positive integers, we can find (for any n) integers {x_1, x_2, …, x_n} such that the 2^n − 1 finite sums are all the same colour.

This is not too surprising. The next generalisation is.

Hindman’s theorem: If we finitely colour the positive integers, we can find integers {x_1, x_2, x_3, …} such that all finite sums are the same colour.

So, how do we prove this? It actually follows rather quickly from the existence of an idempotent ultrafilter, U, which we established during the previous article. When we finitely colour the positive integers, precisely one of the colour classes, c, has measure 1 with respect to our idempotent ultrafilter U.

Suppose we have a set S_n = {x_1, x_2, …, x_n} with the property that ∀U y : (all finite sums of {x_1, x_2, …, x_n, y} are coloured with c). Consequently, for all 2^n − 1 finite sums z of distinct elements of S_n, we have the property ∀U y : (z + y is coloured with c). By idempotence, this gives ∀U x : ∀U y : (z + x + y is coloured with c). Taking the intersection of these 2^n − 1 new statements (one for each z) together with the 2^n − 1 old statements ∀U x : (z + x is coloured with c), we obtain the following:

-

∀U x : ∀U y : (all finite sums of {x_1, x_2, …, x_n, x, y} are coloured with c).

So, simply let x_(n+1) be such an x (guaranteed to exist since measure-1 sets are non-empty) and we obtain a proper superset S_(n+1) with the property. Hence, by induction we are done.

Addition and multiplication

Hindman’s theorem has a multiplicative corollary, that we can find an infinite monochromatic set such that all finite products are the same colour (proof: consider powers of two and apply regular Hindman’s theorem). Indeed, a colour class which is measure-1 with respect to a multiplicatively idempotent ultrafilter would have this desired property. The existence of ultrafilters which lie in the topological closures of the sets of additively and multiplicatively idempotent ultrafilters means that we can actually satisfy the additive and multiplicative versions with the same colour class:

- Double Hindman’s theorem: We can find sets S = {x_1, x_2, …} and T = {y_1, y_2, …} such that all finite sums of elements of S and finite products of elements of T are the same colour.

Similarly, there is a double van der Waerden’s theorem. The proof using ultrafilters is the easiest proof, although this can also be achieved using Szemeredi’s theorem (the density analogue of van der Waerden). The proofs of both double Hindman and double van der Waerden (which, if I remember correctly, can be made to apply simultaneously to the same colour class in some grand generalisation!) make use of minimal idempotent ultrafilters. These are idempotent ultrafilters belonging to a minimal ideal in the semigroup (βN, +), and their existence requires another application of Zorn’s lemma beyond what was necessary to prove the existence of bog-standard idempotent ultrafilters.

Other applications of idempotent ultrafilters are given in this MathOverflow discussion.

Ultrafiltered milk

The concept of ultrafiltered milk is rather amusing, to me at least, since (non-principal) ultrafilters are inherently non-constructive and therefore very unlikely to be useful in agriculture. Indeed, it is an unfortunate fact that ultrafiltered milk does, in fact, have absolutely no connection with ultrafilters whatsoever, and it is a mere etymological coincidence.

I assert that the world would be a much better place if ultrafiltered milk actually involved ultrafilters in the production process…