This is an atypical post, being chiefly about the history of a rather obscure computer that was built in 1960 out of repurposed PDP parts, but it needs to be written somewhere lest it be forgotten. It has been referred to in different places as a ‘PDP-3’, ‘PDP-2½’, or by the name ‘CASINO’; based on the sources that I’ve read, these names all refer to the same unique computer designed by Charles L. Corderman.

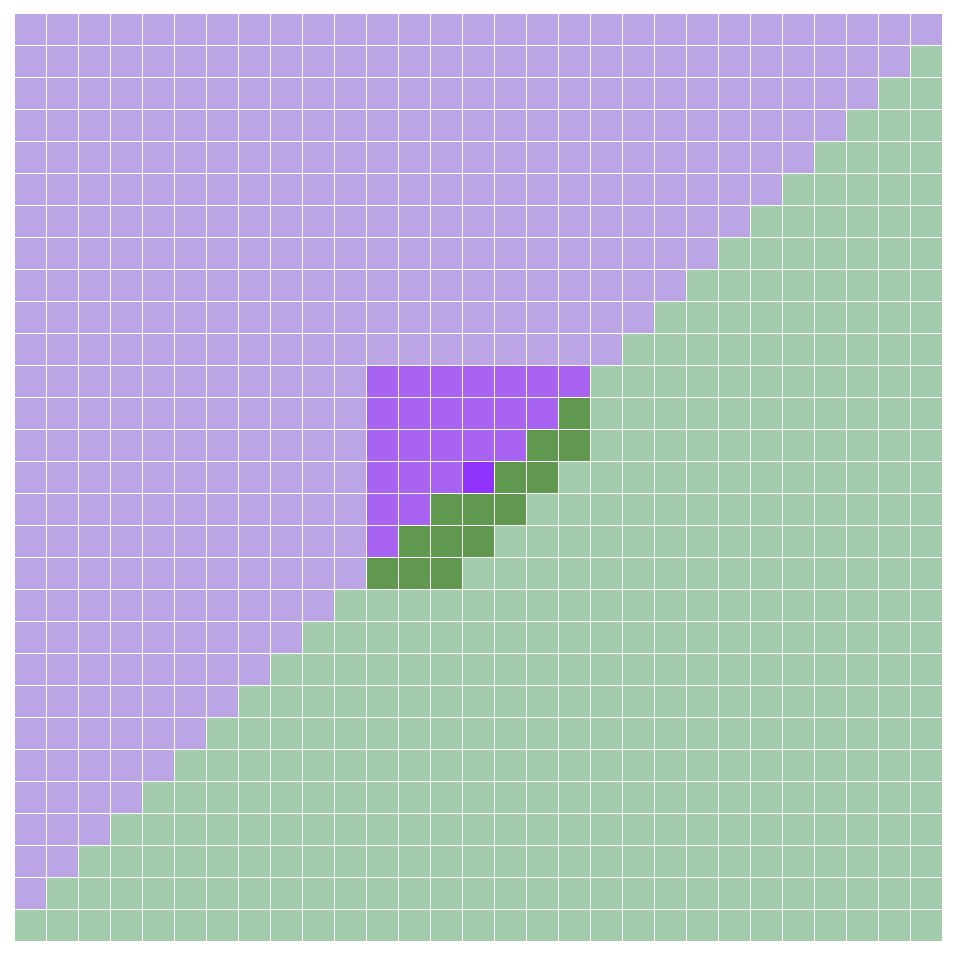

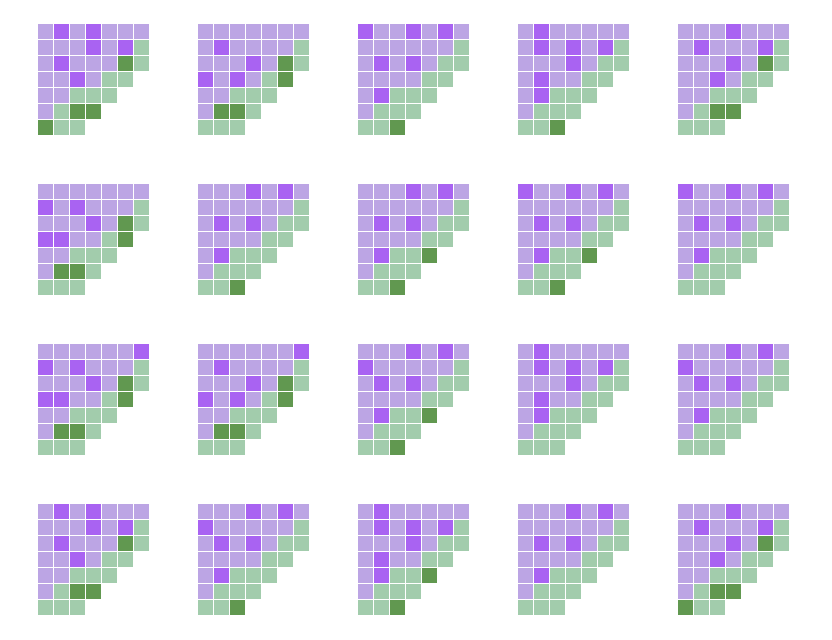

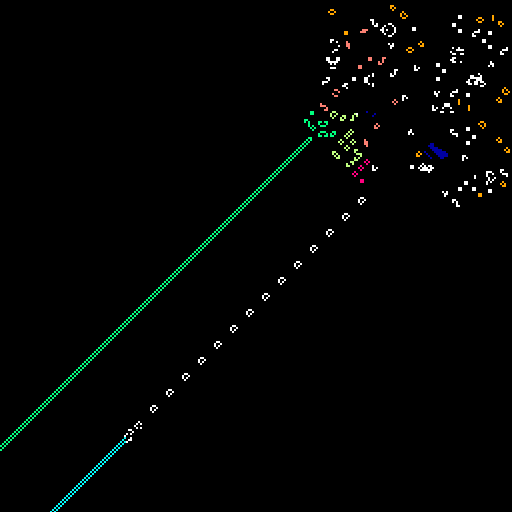

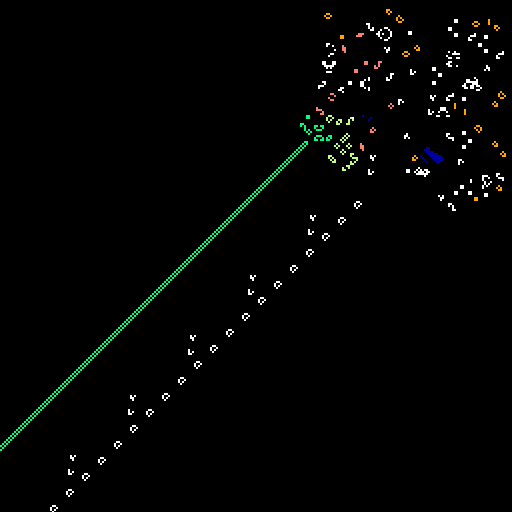

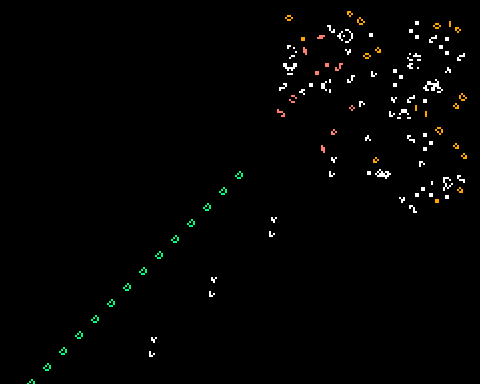

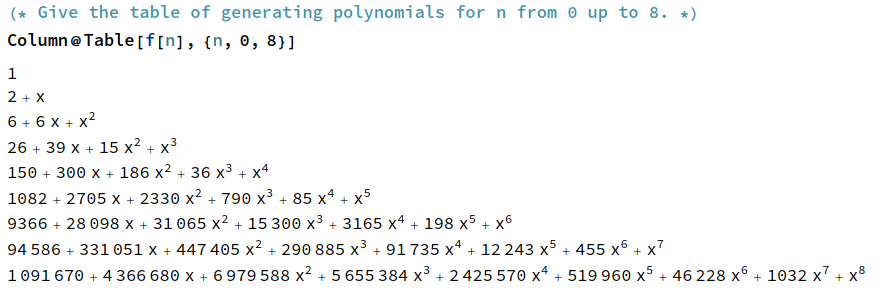

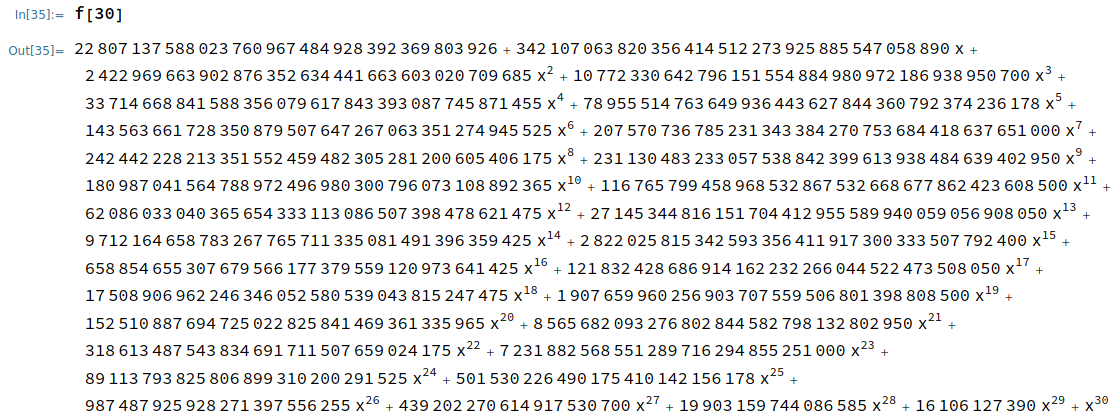

It was the computer with which Corderman discovered the switch engine in 1971, the basis of all infinite-growth patterns that have naturally arisen from random soups in Conway’s Game of Life (as opposed to being deliberately engineered, such as Gosper’s glider gun). This discovery was first published in Volume 4 of Bob Wainwright’s LIFELINE newsletter, reproduced below:

Rich Schroeppel posted a terse note to a mailing list in July 1992 revealing more about how Corderman discovered the switch engine: it was the result of systematically running polyominoes on an unusual computer.

[Corderman] had an oddball computer configuration at a medium sized company in the Boston suburbs. He was systematically tracking all the polyominos, and eventually got up to the Corderman-omino. He saw the puffer, and word eventually reached us at MIT. I don’t remember who found the blinker that stabilizes the puffer, or if it was Corderman or an MIT person.

The unique smallest polyomino which evolves into the switch engine has 9 cells, so this is most probably (but not certainly) the ‘Corderman-omino’ to which Schroeppel refers:

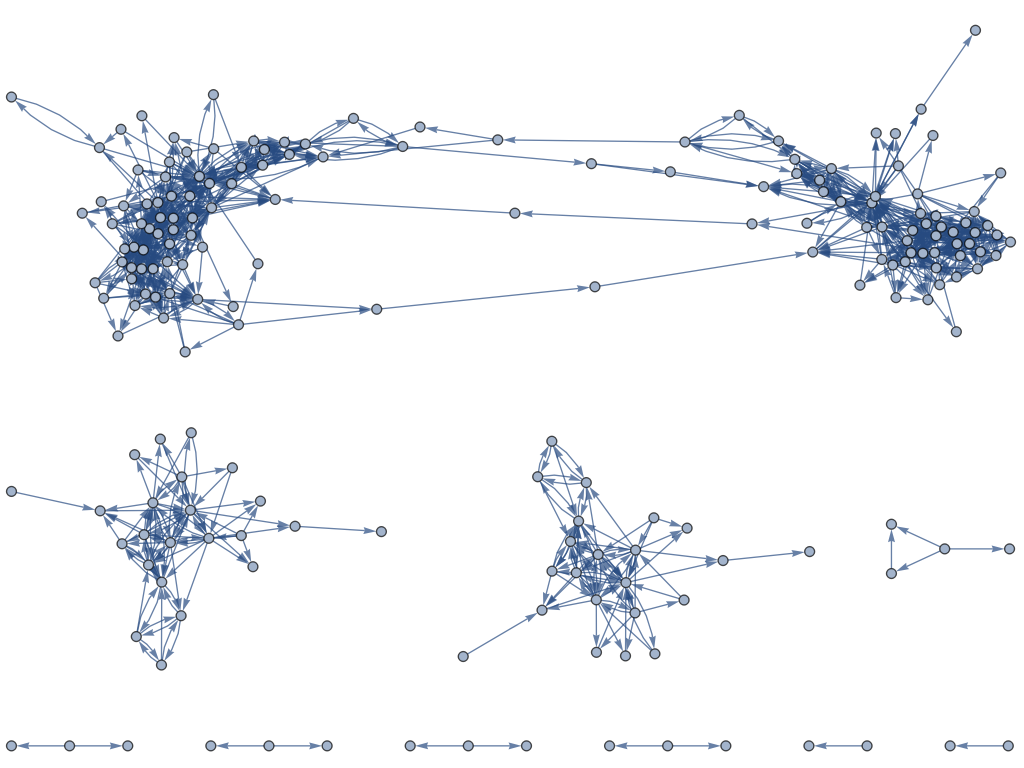
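Corderman’s actual program has not survived in any source I’ve found, so the following is only a minimal sketch of what ‘systematically running polyominoes’ could look like on a modern machine. It enumerates fixed polyominoes (distinct up to translation only, so rotations and reflections are counted separately) and records the population of each over time. The function names and the sparse-set representation are my own choices, not anything from CASINO.

```python
from collections import Counter


def step(cells):
    """Advance a set of live (x, y) cells by one Game of Life generation."""
    # Count, for every position, how many live neighbours it has.
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in cells
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth on exactly 3 neighbours; survival on 2 or 3.
    return {c for c, n in counts.items() if n == 3 or (n == 2 and c in cells)}


def normalise(cells):
    """Translate a shape so that its minimum x and y are both zero."""
    min_x = min(x for x, _ in cells)
    min_y = min(y for _, y in cells)
    return frozenset((x - min_x, y - min_y) for x, y in cells)


def fixed_polyominoes(n):
    """Return all n-cell polyominoes, distinct up to translation only."""
    shapes = {frozenset({(0, 0)})}
    for _ in range(n - 1):
        grown = set()
        for shape in shapes:
            for (x, y) in shape:
                # Try attaching one new cell to each edge-adjacent position.
                for nb in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
                    if nb not in shape:
                        grown.add(normalise(shape | {nb}))
        shapes = grown
    return shapes


def population_history(cells, generations):
    """Return the list of live-cell counts for generations 0..generations."""
    cells = set(cells)
    history = [len(cells)]
    for _ in range(generations):
        cells = step(cells)
        history.append(len(cells))
    return history


if __name__ == "__main__":
    # Run every fixed pentomino for 100 generations and show its final population.
    for shape in sorted(fixed_polyominoes(5), key=sorted):
        print(sorted(shape), population_history(shape, 100)[-1])
```

A search in this style would then flag any polyomino whose population keeps climbing for closer inspection. Deciding what counts as ‘keeps climbing’ is a heuristic left open here, and this sketch makes no attempt to reproduce the way Corderman’s program ‘chased’ live non-gliders.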

Much more interesting is the ‘oddball computer’ with which Corderman performed this search. Bill Gosper replied to Schroeppel’s post, detailing much more of its history, including the fact that Corderman designed and built the machine with two collaborators:

His computer was an absolutely unique (37 bits, no parity) large mainframe built and programmed by only three people, two of whom were gone when we met him. The design was begun by DEC, intended to be their PDP-2 or PDP-3, challenging IBM’s 709.

G.D. Searle (pharmaceuticals) of Waltham staked their data processing hopes on DEC’s delivery of the machine, and when DEC abandoned it, Searle bought the plans and the parts, and Corderman and his pals completed (and radically eccentrized) it.

The programming environment was *entirely* menu-driven via a light pen–an incredibly awkward pointing device consisting of little but a phototransistor that the mainframe had to query after displaying each dot. (This was late 1971 or so–years before mice and menus, except maybe Engelbart’s.)

No one had a memory big enough, nor a display fast enough for a bitmap raster.

During the demo, the Flexowriter (machine’s only keyboard) remained powered off, except briefly when he light penned it into printing a lowercase “r”, just to prove it worked.

Zero input keystrokes; one output keystroke. He had menus all the way down to twiddling individual memory bits. The screen phosphor, by the way, was magenta.

I believe word of his “switch engine” was brought to us personally by Wainwright, who entrained us for the Corderman segment of his New England Life tour. I believe Corderman said he was exhausting the decominoes when he noticed several iterations of an almost viable switch engine. (His program “chased” live nongliders.) He then cultivated the sprout until he found the two variants. (The omino does not recur in the period, and may need to be rediscovered.)

Back in May 2023 I tried to see if there was any record online of the existence of this marvellous machine. Eventually I found a 1997 Usenet post by Max Ben-Aaron (who worked at the aforementioned company) which corroborates Gosper’s story and gives a name to this computer — ‘Casino’:

In the late 60’s & early 70’s I worked for a company (Medidata, later Searle Medidata) which started life as a not-for-profit spin-off from Lincoln Lab. (as I have heard), called American Science Institute. The chief engineer, Ed Rawson was a friend of Dec’s Olsen and he managed to get hold of the modules used for the prototype PDP-2 which never reached the market. ASI used them to build their own machine (designed, I believe, by Chuck Corderman) which they called “Casino” and was sometimes jocularly referred to as a PDP-2 1/2. Casino was noteworthy for having, very early in trhe [sic.] game, graphics capabilities. It also had some special terminals which had labels that cannot be repeated on this (family) newsgroup.

‘Chuck’ is an American nickname for ‘Charles’, so the story checks out.

I did some digging, and ‘American Science Institute’ is almost certainly a misremembering of the Scientific Engineering Institute of Waltham, MA, which (according to a book by Gordon Bell) built a PDP-3 in 1960 that ended up in a museum in Oregon in 1974. I also found a letter referring to ‘CASINO’, this time in block capitals:

Edward B. Rawson (the person who wrote that letter) published a paper in a medical journal from the SEI in 1968. Later that same year the organisation had rebranded as Searle Medidata (now a for-profit company), and we see patent applications by Rawson. Crucially, the SEI and Searle Medidata had exactly the same postal address (140 Fourth Avenue, Waltham, MA) and both involved Rawson, so we can be pretty sure that the SEI became Searle Medidata. This also lines up precisely with Schroeppel’s description of a “medium sized company in the Boston suburbs”.

Gordon Bell, who wrote the remark about the PDP-3, was also privy to the various letters in that paper trail, and was involved in establishing the DEC museum. So the PDP-3 described by Bell is almost certainly CASINO itself, which would date its construction right back to 1960 (understandable, since that’s when the PDP-3 was going to be built anyway), the lower bound of the 1960-1971 range. That would make a huge amount of sense: the machine would have been obsoleted by subsequent DEC machines by the late 1960s, so Searle Medidata no longer needed it, and its creator (Corderman) likely didn’t want to see it go to waste and so repurposed it for running polyominoes in CGoL (because why not?). That would also explain why the other two coinventors of CASINO weren’t around at the time Gosper saw it: it was already eleven years old!

When I decided to search for more about the PDP-3 today, I saw that Lars Brinkhoff and ‘jnc’ had recently assembled a wiki page on CASINO. The authors had found the same online references that I did, along with another reference that I’d missed at the time: a 2009 book by Paul A. Suhler on the design of the Lockheed Blackbird. Conversely, the authors of that page seem to be unaware that this was also the machine with which Corderman discovered the switch engine (unsurprisingly, as that can only be deduced from a 1992 e-mail that Bill Gosper posted to a private mailing list about cellular automata).

Brinkhoff later edited the page to explain the name CASINO (apparently an acronym for ‘Computer Able to select INternal Orders’), but no further references were included, so I’ll have to ask Brinkhoff directly how he determined this.

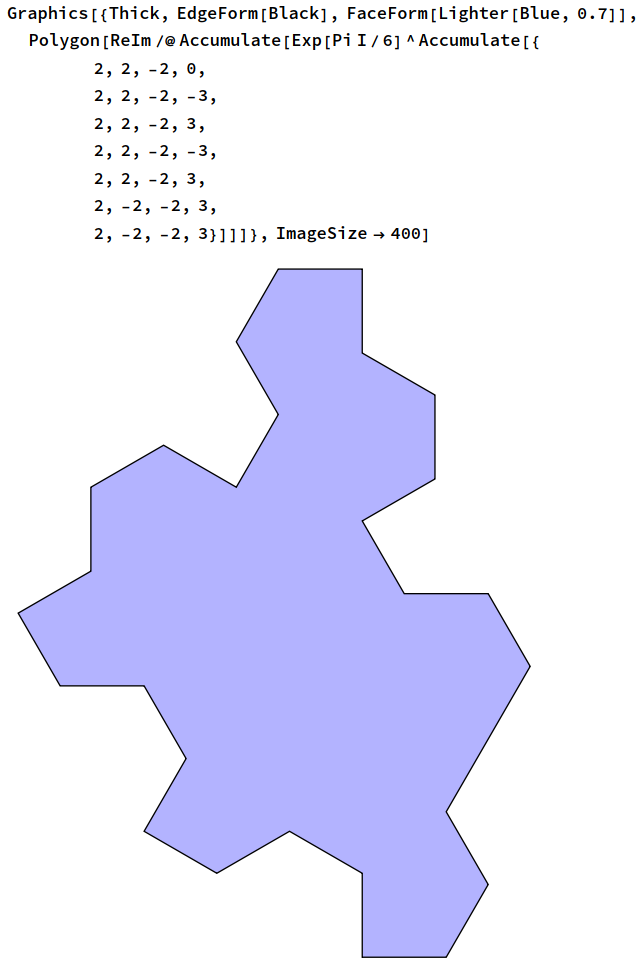

The Wikipedia article on the PDP series is consistent with the machine having been originally built for military aviation, mentioning that “the only PDP-3 was built from DEC modules by the CIA’s Scientific Engineering Institute (SEI) in Waltham, Massachusetts to process radar cross section data for the Lockheed A-12 reconnaissance aircraft* in 1960”. It also reports the word size as 36 bits. It seems that 36 bits was the word size DEC intended for the PDP-3 (which DEC itself never built), but Corderman’s actual physical computer had (depending on which source you believe) 37- or 38-bit words.

*the same A-12 after which Elon Musk and Grimes named one of their children

A Google search for “scientific engineering institute” “waltham” also found a blog post written in January 2025 on the history of the PDP machines, including an excerpt about CASINO from Suhler’s book. This mentions that Corderman’s two collaborators were Jay Lawson and the aforementioned Edward B. Rawson (chief engineer at SEI), and that indeed the machine had significantly diverged from DEC’s original PDP-3 architecture:

The project was run like a homebrew computer project, with more emphasis on getting the machine and software to run rather than on making it well documented and easy to use. The design evolved so rapidly that when one of the engineers returned after a two-week absence, he didn’t recognize it. The design evolved away from the original PDP-3 architecture, and it came to be called CASINO.

Given that CASINO is rumoured to have unfortunately been destroyed, it is unlikely that we will ever know the full details of this remarkable machine…